If government is designing our brave new 'sharing by default' health system world with the patient at the centre, how does ChatGPT Health fit into all those big, complex, government controlled, expensive, centralised, and slow burn plans?

If you’ve deigned to read yet another Op Ed about AI and health – yawn – this isn’t actually one.

That would be because I don’t understand much if anything about it, and in particular I have no clue how it will actually impact how we do health in this country, other than to say, I’m pretty sure the impact is not going to be small.

A quick hopefully relevant story.

Back in about 2002 (ish), a particularly bright internet analyst we had – he had once worked on the UK nuclear submarine software program – stopped me in the hallway of the business I once ran and said something like:

“Hey, you know Google?”

I did of course and I loved it even way back then, but only in an old media way of thinking – we can do research for stories much better than Yahoo.

We were a media company, making nearly all of our money in print magazines, so Google, which had started life in a small uni lab as a PhD thesis called BackRub, in 1995, but had launched to the public in 1998, was nowhere near top of mind for business ideas for us bright sparks.

“Well…..” then he said a whole raft of internet architect stuff I didn’t understand for about five minutes ….”so I think if I can do this with all our websites and the mass of product data on them, Google will send us a cheque”.

Me: “What?” …”for how much…?”

Jack (not his real name): “no idea”

Me: “how much time and money do you need”

Jack: “not sure, how about $10k”

Me: “cool…give it a whirl”

Old internet timers like me may have recognised that Jack was talking to me about AdSense, which sounds today like I must have been an idiot not to have known about it, but back then, not many people were using it and not many people did know about it (I was an idiot for other reasons BTW).

Anyway, Jack did a bit of programming, connected our sites up (we had lots even then for each mag) and we got our first cheque (not actually a paper cheque) from Google the following week.

I was bug-eyed. It wasn’t for a lot, but the upside was immediately obvious.

Very long story short: within six weeks we’d paid off our $10,000 investment and within a few years the entire business had pivoted around B2B data (we had lots of it stored to make paper magazines out of it) and the internet.

By 2009, a $40 million B2B print magazine company was a $90 million company, operating in 38 countries with over 120 websites.

Google was our beating heart business model, and we helped our thousands of clients get up to speed on it much faster than they may have themselves. Much faster. Which is why we did much better than any other old paper based B2B publisher at the time.

Main thing – we facilitated our clients doing business so much more efficiently.

I didn’t see Google coming in the manner it ended up coming at us, so this article isn’t about to criticise the government and the Australian Digital Health Agency for not contemplating more what AI is very likely to do to their plans to make sure patients finally have agency in a system which currently stores all their important information in silos that none of them can get at.

And which they plan to enhance, but basically still govern and control.

If you look at the two key planning documents of the ADHA – The National Digital Health Strategy 2023-28 and its accompanying Roadmap – AI is contemplated quite a bit, but in a very conservative, and I’m going to suggest now, given what is going on around us all, an overly constrained way.

A curt summary might be, “we see it, we’re thinking about it we promise, we think it will be important (yay), but we need to do all this stuff first, and only then we can engage with it in a meaningful way that will help patients”.

There’s some logic to the Agency’s thinking here: AI can’t help until interoperable, high-quality data exists.

But I’m going to suggest that this is flawed logic.

It’s based on a not entirely bad obsession with, and focus on, fixing up a very old siloed system of health data storage and sharing, with some increasingly old, but seemingly new, technology.

Things are going so much faster than this thinking is.

Quick anecdote, maybe relevant though: back in 2002 our legacy systems in publishing were hilariously more legacy than our current set of silos for data in health – things like hospital EMRs, GP and specialist patient management platforms, the major health booking engines and so on forever. No, these are pretty well set up and sophisticated data stores compared to what we had back in a dinosaur publishing outfit making magazines mainly in 2002.

But it took us three weeks to expose a lot of that data to Google and start making money for us and our clients – way back then.

When I think about things like Best Practice, EPIC, HotDoc, HealthEngine and the My Health Record in this light, I’m actually super excited about what might be coming. As siloed and disorganised as all these stores of patient data are, compared to what we had to start with in 2002 and what we did, these systems are pretty amazing, and I’m going to suggest, in the right circumstances, accessible, especially to a patient who wants their data out of any of them.

I’m pretty worried, though, that we aren’t stepping back enough and looking at what is actually going on here.

AI doesn’t care about where the data is and how hard it is for healthcare professionals to get at it at the right time and place.

And neither will patients.

Patients and their interest in their own data is the key – so not wrong there government and ADHA – but AI is about to hand patients a tool that is going to incentivise a lot of patients to just go and get the data they need from wherever it actually is and process it.

AI is handing patients the power if they want to use it.

Most of us in our hearts know it’s already being used by patients very effectively to improve their healthcare journey.

Who doesn’t know someone who has questioned their healthcare provider on ChatGPT or Claude and ended up significantly altering the path of their care for the better?

The first thing I’m going to do with ChatGPT Health when I go to my GP next and get an imaging report or bloods is ask ChatGPT what they think and what I maybe should be discussing with my GP or anyone else relevant to what is going on.

Admission: I still haven’t signed up properly to My Health Record.

Every time I’ve tried I give up because it’s too hard for the time I have to give it. It’s not easy to use, useful or fun.

That’s not going to happen with ChatGPT Health though and my pathology results.

And if I have something serious, do you think I’m going to trust my GP and the specialist I get sent to?

Yes and no.

Yes, I’ll instinctually sense if they know what they are doing. It’s their domain, and they are front and centre the people I’d love to be working with as a patient with a problem.

No, I’ll also ask AI what it thinks as a back-up (classic second opinion here less the time and money hassle) and to give me some more positional power in my conversation with the once all information powerful doctor and hospital.

Before anyone starts, I’ve already been subject to a barrage of criticism for this line of thinking:

- ChatGPT has already killed a few people with its hallucinations – it needs guardrails and lots of governance.

- It needs the right information at the right time.

- It’s just an upgrade of Dr Google. Healthcare professionals must always be overseeing things at every point of the care cycle as a sense check.

- … and so on.

To each of these points: patients don’t care so don’t worry about thinking it.

Don’t worry too much about all that hallucination, guardrail and governance stuff either. This is now a runaway train which we have to do our best to hang on to and do what we can with it when we can.

I read an interesting piece last night from a very respected health system consultant about how AI could be like the well-meaning engineer who put lead in petrol to make the combustion process much better for everyone.

This piece was saying, quite rightly, that we need to frame AI how patients and the system want to use it, so commissioning is a vital ongoing process for everyone in this brave new world.

Good logical thinking yes – a few weeks ago maybe. Now ChatGPT Health is up and Claude Health is coming. The train is running away at speed, a lot of our patients are on board already, and we can’t stop it.

We need to get on board somehow and help them with it.

I’m in no way suggesting that doctors and other healthcare professionals aren’t a completely vital part of healthcare that need to stay firmly in the guiding position in this loop. They are and I’m pretty sure they will.

I’m just suggesting that the context in which we are thinking about how important healthcare information is surfaced and flows in a manner optimal for the patient – which is what we are all aiming at, right? – has changed seismically in the last year or so, and we’re being a bit slow to recognise that and adjust what we are doing.

Like Google or not (for the record, from the very beginning I hated what Google was doing, is doing and will still do, and then what all the big platforms have all done and will do) AI isn’t stopping for government, for “proper governance”, for “guardrails”, for a bit of common sense.

If and when we do end up getting these things going – which we probably will – they’ll likely be out of date or dysfunctional ideas that hamper better information flow to patients, not enhance it.

We’re in a new paradigm operating and building out “sharing by default” in what is now an old paradigm – one which we rightly have been trying to transform with traditional methods.

None of us – government and ADHA included – are at fault for this or stupid for being where we are.

AI and Health AI are an industrial revolution transformation type event, but coming at us at light speed, not steam engine speed.

And it’s not like we haven’t been contemplating a lot of it so far – administration efficiency, coding, decision support (yep, we should go there quickly), data analysis, scribing, consulting notes, in IHIO Innovations Corporation in the US, prescribing now, and so on.

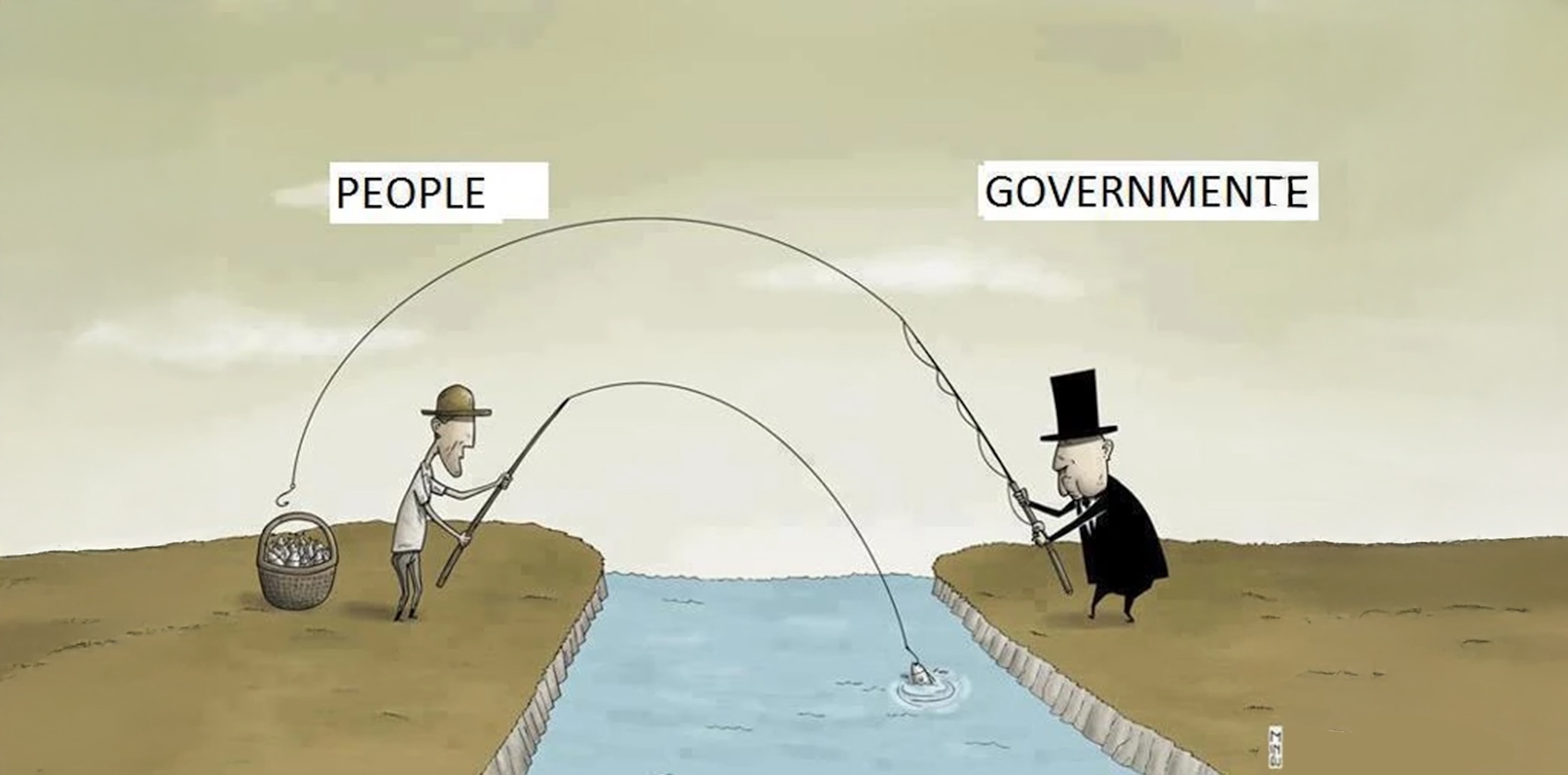

But we’re mostly all doing it from the provider side of the fence, where healthcare professionals are the top of the tree and government regulation, policy and infrastructure are close behind.

Where the doctor and the system are the controllers of the patient data, even if we’d love patients to be in control of it somehow.

It’s the old paradigm: the one where the government has set out on what we might once have seen as the admirable goal of having a patient’s healthcare data follow them wherever they go.

What this new world is contemplating, and might enable if we had a strategy around it, somehow embraced it and certainly didn’t try to wrangle or control it too hard, is that patients just have their data (i.e., they don’t have to follow it, they have it).

We quickly need to get on the patient side and start understanding how ChatGPT Health, Claude Health, and the others as they roll them out, are going to affect patient behaviour, so we understand much better how to work with this technology, and dovetail into it as quickly and effectively as we possibly can.

Remember, currently government and ADHA policy and strategy is to build interoperability and better data first… then join it to AI.

Government is charged with doing what its name suggests after all: rule stuff, govern it.

It’s going to be very counterintuitive to being in government, so it will take creative leadership.

Government and the Agency are going to need to let go a bit.

Not control everything.

If we keep trying to rule, control and govern this new beast we are going to waste a lot of money and time.

That paradigm isn’t going to work now.

We need to open our minds and be more creative in how we empower patients in this new world.

We need to potentially contemplate that all that complexity in healthcare data silos we are currently trying to get on top of using what we thought was the latest technology could easily be redundant.

That the new platform for sharing isn’t one the government controls.

It’s one that patients ultimately control.

It’s one – and I don’t like this remember – that the AI vendors are facilitating.

It’s one that we now need to seriously contemplate using as our platform to make all those inaccessible databases, with their poorly coded data, accessible.

Fast.

That’s if we really actually believe that patients should be at the centre of things and we want to make our healthcare system a lot better overall.